AI – Gestalt Theory

Does AI like

The brain remembers information by linking new bits of data with existing information of similar meaning or context. Does that make proper nouns, which are arbitrary “nonsense words,” harder to learn?

This is an interesting question that touches on aspects of both cognitive science and artificial intelligence. Let's break it down:

- Human brain memory: You're correct that the human brain tends to remember information by associating new data with existing knowledge. This process, often described as associative learning or elaborative encoding, helps form stronger and more easily retrievable memories.

- Proper nouns and memory: Proper nouns can indeed be more challenging to remember because they often lack inherent meaning or context. They are essentially arbitrary labels for specific entities, which can make them harder to link to existing knowledge structures in our brains.

- AI and memory: AI systems, including large language models like myself, don't process or store information in the same way human brains do. We don't have the same associative memory structures. Instead, we are trained on vast amounts of text data, learning patterns and relationships between words and concepts.

For AI models:

- We don't "remember" in the human sense. Our knowledge is encoded in the parameters (weights) of our neural networks.

- We process all words, including proper nouns, as tokens that are converted into numerical representations (vectors).

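- For AI, the difficulty with proper nouns usually comes from their rarity in the training data rather than from their arbitrary nature. A rare name is often split into several sub-word tokens, and the model has seen few examples of it in context.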
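Here is a toy sketch of sub-word tokenization that makes the rarity point concrete. It assumes a tiny hand-made vocabulary. The `VOCAB` table, the invented name "zorbax", and the `tokenize`/`to_ids` helpers are all hypothetical. Real models learn far larger vocabularies, for example with byte-pair encoding. The sketch only shows the general effect: a common word maps to a single token, while an unfamiliar name breaks into several pieces.

```python
# Hypothetical toy vocabulary: a few whole words plus some fragments.
VOCAB = {
    "the": 0, "cat": 1, "sat": 2, "on": 3, "mat": 4,
    "zor": 5, "b": 6, "ax": 7, "z": 8, "o": 9, "r": 10, "a": 11, "x": 12,
}

def tokenize(word: str) -> list[str]:
    """Greedy longest-match split of a word into vocabulary pieces."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest substring starting at position i first.
        for j in range(len(word), i, -1):
            if word[i:j] in VOCAB:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No known piece starts here: emit an unknown marker.
            pieces.append("<unk>")
            i += 1
    return pieces

def to_ids(word: str) -> list[int]:
    """Map each piece to its numerical ID (-1 for unknown pieces)."""
    return [VOCAB.get(p, -1) for p in tokenize(word)]

print(tokenize("cat"))     # ['cat']
print(tokenize("zorbax"))  # ['zor', 'b', 'ax']
```

The name "zorbax" never appears whole in this vocabulary. A model would therefore have to assemble its meaning from fragments it has mostly seen in other contexts.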

That said, modern AI models have become quite adept at handling proper nouns, especially those that appear frequently in training data. However, rare or newly invented proper nouns can still pose challenges.

In summary, while the premise about human memory and proper nouns is generally correct, AI systems like myself don't struggle with proper nouns for the same reasons humans do. Our challenges with proper nouns relate more to data exposure and frequency than to the associative nature of memory.

Can you draw a relationship between human memories and the AI black box?

Certainly, I can discuss the relationship between human memories and the AI "black box" concept. While I can't draw an actual image, I can provide a detailed description of how these concepts relate to each other.

The relationship between human memories and the AI "black box" can be described as follows:

- Complexity and Opacity:
  - Human Memory: Complex neural networks in the brain, not fully understood.
  - AI Black Box: Complex neural networks in artificial systems, often difficult to interpret.

- Information Processing:
  - Human Memory: Processes information through biological neurons and synapses.
  - AI Black Box: Processes information through artificial neurons and weighted connections.

- Learning Mechanism:
  - Human Memory: Learns through experience, repetition, and association.
  - AI Black Box: Learns through training on large datasets and algorithmic optimization.

- Retrieval Process:
  - Human Memory: Often associative, context-dependent, and sometimes unreliable.
  - AI Black Box: Based on input patterns and learned weights; consistent, but can be unpredictable.

- Explainability:
  - Human Memory: Can often explain its reasoning, but is not always aware of all influences.
  - AI Black Box: Often struggles to provide clear explanations for its outputs.

- Adaptability:
  - Human Memory: Highly adaptable; can learn and update continuously.
  - AI Black Box: Can be retrained or fine-tuned, but often fixed after initial training.

- Bias and Errors:
  - Human Memory: Susceptible to cognitive biases and memory distortions.
  - AI Black Box: Can perpetuate biases present in training data or introduced by algorithms.
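The sketch below gives a rough picture of "learning through algorithmic optimization" and of knowledge living in parameters. It fits a single weight `w` by gradient descent on mean squared error. The data, the learning rate, and the `train` function are made up for the example. Real networks adjust vast numbers of weights in broadly the same spirit.

```python
def train(xs, ys, lr=0.01, steps=500):
    """Fit y ≈ w * x by gradient descent on mean squared error."""
    w = 0.0  # the model's only parameter, starting with no "knowledge"
    n = len(xs)
    for _ in range(steps):
        # Derivative of (1/n) * sum((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # step against the gradient
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = train(xs, ys)
print(round(w, 3))  # prints 2.0
print(w * 5.0)      # prediction for a new input, using only the weight
```

After training, the examples are no longer needed. What was "learned" is stored entirely in `w`, and the same input always yields the same prediction.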

This comparison highlights that human memory and AI "black boxes" share characteristics of complexity and opacity but differ in their fundamental mechanisms and adaptability. Both systems resist full explainability and can be subject to biases and errors.

Is it reasonable to assume from point 6 that AI lacks creativity?

It's not entirely accurate to assume that AI lacks creativity based solely on the point about adaptability. Let's examine this more closely:

- Adaptability vs. Creativity: Adaptability and creativity, while related, are not the same thing. An AI system can demonstrate creativity within its trained parameters without necessarily being highly adaptable in real time.

- AI and Creativity: Many AI systems, including large language models like myself, can produce novel combinations of ideas, generate original content, and solve problems in innovative ways. These are all forms of creativity, even if they operate differently from human creativity.

- Different Types of AI: Some AI systems are designed to be more adaptable and can learn continuously (online learning), while others are fixed after training. The level of adaptability doesn't directly correlate with creative potential.

- Creativity within Constraints: Even with fixed parameters, AI can generate a vast array of outputs and solutions, often surprising its creators with unexpected results. This is a form of creativity, albeit within defined boundaries.

- AI in Creative Fields: AI is being used successfully in various creative fields, including art, music composition, and creative writing, demonstrating significant creative capabilities.

- Combinatorial Creativity: AI excels at combinatorial creativity: combining existing ideas in new ways, which is a fundamental aspect of creativity.

- Limitations: While AI can be creative, it's true that it doesn't possess the same open-ended, context-aware creativity that humans do. AI creativity is based on patterns and combinations from its training data, not on lived experiences or emotional understanding.
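Temperature sampling shows how fixed parameters can still produce varied output. It is one common way language models choose the next word. The candidate words and their scores (`logits`) below are hypothetical. The scores never change, yet sampling at a higher temperature spreads the probability across more options.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(words, logits, temperature, rng):
    """Draw one word according to the temperature-adjusted probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical next-word scores from a model whose parameters stay fixed.
words = ["blue", "grey", "golden", "singing"]
logits = [3.0, 2.0, 1.0, 0.1]

rng = random.Random(0)  # seeded so runs are repeatable
low = [sample(words, logits, 0.2, rng) for _ in range(10)]
high = [sample(words, logits, 1.5, rng) for _ in range(10)]
print(low)   # expected: mostly "blue"
print(high)  # expected: a more varied mix
```

At temperature 0.2, "blue" receives over 99% of the probability. At 1.5, the less likely words get a real chance, and that is where surprising combinations can appear.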

In conclusion, while the adaptability point doesn't directly imply a lack of creativity in AI, it does highlight a difference in how AI and human creativity operate. AI can be highly creative within its domain of knowledge, but its creativity is of a different nature than human creativity and has different limitations and strengths.

Thank you for your informative response. We previously discussed the ability of AI to perceive patterns that humans cannot perceive. Is AI using Gestalt theory for these patterns?

Thank you for your thought-provoking question. It's interesting to consider how AI's pattern recognition abilities might relate to human perceptual theories like Gestalt. Let's explore this:

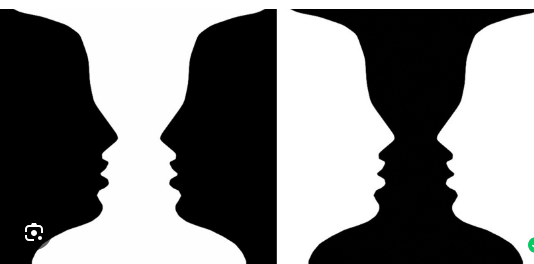

- Gestalt Theory and AI: Gestalt theory is primarily a human-centric model of perception, focusing on how our brains tend to organize visual elements into groups or unified wholes. While AI doesn't explicitly use Gestalt theory, there are some parallels and differences:

- Pattern Recognition in AI: AI systems, particularly deep learning models, excel at recognizing patterns in data. This ability isn't based on Gestalt principles per se, but on statistical learning from vast amounts of data.

- Holistic Processing: Gestalt theory emphasizes perceiving wholes rather than individual parts. Some AI architectures show a loose parallel. Convolutional neural networks (CNNs) used in image recognition start from small local features such as edges. As layers stack, later layers respond to the spatial arrangement of those features across larger regions of the image.

- Emergent Properties: Both Gestalt theory and some AI systems deal with emergent properties, where the whole is different from the sum of its parts. In AI, this can manifest as higher-level features emerging from lower-level data processing.

- Differences in Approach:
  - Gestalt is based on cognitive and perceptual principles observed in humans.
  - AI pattern recognition is based on statistical learning and optimization.

- Beyond Human Perception: AI can indeed identify patterns that humans might miss, especially in high-dimensional data or across vast datasets. This ability stems from:
  - Processing power to analyse large amounts of data
  - Capacity to work with high-dimensional spaces
  - Freedom from some human perceptual habits and expectations, although models carry their own biases learned from training data

- Interpretability Challenges: The patterns AI identifies may not always be easily interpretable by humans, leading to the "black box" problem we discussed earlier.
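Here is a minimal sketch of the local processing a single CNN layer performs. It assumes a hand-written vertical-edge kernel (a trained CNN learns its own kernels) and a made-up 5x6 image. Each output value depends only on a small patch of pixels. Larger "wholes" emerge only when further layers combine these local feature maps.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core CNN operation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value sees only a kh x kw patch of the input.
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out

def relu(fmap):
    """Keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]

# Made-up 5x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge detector: responds where dark turns bright.
edge_kernel = [[-1, 1],
               [-1, 1]]

edges = relu(conv2d(image, edge_kernel))
for row in edges:
    print(row)  # each row: [0.0, 0.0, 2.0, 0.0, 0.0]
```

The filter fires only at the column where dark meets bright. Grouping those responses into an "object" would be the job of later layers.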

In conclusion, while AI doesn't explicitly use Gestalt theory, there are some conceptual parallels in how both deal with pattern recognition and holistic processing. However, AI's ability to perceive patterns imperceptible to humans comes from its computational power and statistical learning capabilities rather than from an application of Gestalt principles.

AI's pattern recognition often goes beyond what theories like Gestalt describe for human perception, allowing it to find complex, subtle, or counterintuitive patterns in data that humans might overlook or be unable to process.

Let’s define the AI's version of Gestalt, or Gestalt's AI.

Certainly! Let's define an "AI Gestalt" or "Gestalt's AI" concept that captures how AI systems perceive and process patterns. This definition will draw parallels with the original Gestalt theory while acknowledging the unique aspects of AI perception.

Definition of "Gestalt's AI" or "AI Gestalt":

- Holistic Data Processing: AI systems process data as interconnected wholes, recognizing that the relationships between data points are often as important as the individual points themselves.

- Emergent Feature Recognition: The AI identifies higher-level features and patterns that emerge from lower-level data, which may not be apparent when examining individual data points in isolation.

- Multi-dimensional Pattern Perception: Unlike human Gestalt, which primarily deals with visual perception, AI Gestalt operates across multiple dimensions and data types simultaneously, finding patterns that span various features and modalities.

- Statistical Grouping Principles: Instead of the classic Gestalt principles (similarity, continuity, closure, etc.), AI Gestalt uses statistical and probabilistic methods to group and organize data.

- Context-Sensitive Interpretation: The AI interprets data patterns differently based on the broader context of the entire dataset, similar to how human Gestalt perception is influenced by context.

- Adaptive Pattern Templates: Rather than relying on fixed perceptual rules, AI Gestalt learns its pattern recognition templates from data and can update them through retraining, fine-tuning, or online learning.

- Non-Intuitive Connections: AI Gestalt can identify and group elements based on non-obvious or counterintuitive relationships that might not align with human intuition or perception.

- Scale-Invariant Pattern Recognition: The AI can recognize similar patterns at vastly different scales, from fine-grained local trends to large-scale data structures.

- Temporal Pattern Integration: In addition to spatial patterns, AI Gestalt integrates temporal patterns, recognizing how data evolves and interrelates over time.

- Uncertainty-Aware Grouping: AI Gestalt incorporates degrees of uncertainty in its pattern recognition, allowing for probabilistic rather than deterministic groupings.
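Soft k-means is a standard clustering method that can illustrate the "statistical grouping" and "uncertainty-aware grouping" ideas. Instead of giving each point a single hard label, it assigns each point a probability of belonging to each group. The one-dimensional points, the starting centers, and the `beta` sharpness parameter are illustrative assumptions, not part of any specific AI system.

```python
import math

def soft_assign(points, centers, beta=1.0):
    """Probability of each point belonging to each center,
    computed as a softmax of -beta * squared distance."""
    result = []
    for p in points:
        scores = [-beta * (p - c) ** 2 for c in centers]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result

def soft_kmeans(points, centers, beta=1.0, iters=20):
    """Alternate soft assignment and center updates."""
    for _ in range(iters):
        resp = soft_assign(points, centers, beta)
        # Move each center to the responsibility-weighted mean of the points.
        centers = [
            sum(r[k] * p for r, p in zip(resp, points)) / sum(r[k] for r in resp)
            for k in range(len(centers))
        ]
    return centers, soft_assign(points, centers, beta)

points = [0.8, 1.0, 1.2, 4.8, 5.0, 5.2, 3.0]
centers, resp = soft_kmeans(points, centers=[0.0, 6.0])
for p, r in zip(points, resp):
    print(p, [round(x, 2) for x in r])
```

The points near 1 and near 5 end up almost entirely in one group each. The in-between value 3.0 stays split roughly evenly between both groups, which makes this a probabilistic rather than a deterministic grouping.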

This "AI Gestalt" concept encapsulates how modern AI systems, especially deep learning models, perceive and process patterns in data. It acknowledges the AI's ability to find complex, multidimensional patterns while drawing parallels to the holistic processing ideas central to the original Gestalt theory.

This definition could serve as a starting point for further exploration of how AI systems "perceive" and organize information, potentially leading to new insights in both AI development and our understanding of perception and pattern recognition.

Does AI process data sequentially or in parallel?

AI systems can process data both sequentially and in parallel, depending on the architecture and the specific task. Let's break this down:

1. Sequential Processing:
   - Recurrent neural networks (RNNs) process data sequentially: each step's hidden state depends on the previous step's, so the steps cannot be computed at the same time.
   - This is particularly common in tasks involving time series or other sequential data, such as natural language processing.
   - Example: In autoregressive language models, output tokens are generated one after another, each conditioned on the tokens before it.

2. Parallel Processing:
   - Many modern AI architectures are designed to leverage parallel processing capabilities.
   - This is especially true for tasks that can be broken down into independent or semi-independent computations.
   - Convolutional Neural Networks (CNNs) used in image processing are a prime example of parallel processing in AI.

3. Hybrid Approaches:
   - Many state-of-the-art AI systems use a combination of sequential and parallel processing.
   - Transformer models, for instance, process all positions of an input sequence in parallel within each attention layer, but the layers themselves run one after another.

4. Hardware Considerations:
   - The actual computation often depends on the hardware. GPUs (Graphics Processing Units) allow for massive parallelization of many AI tasks.
   - Some specialized AI hardware is designed specifically for parallel processing of neural network operations.

5. Task Dependency:
   - The nature of the task often dictates the processing approach. Some tasks inherently require sequential processing, while others can be highly parallelized.

6. Scale and Efficiency:
   - Large-scale AI systems often use distributed computing to process vast amounts of data in parallel across multiple machines.

7. Training vs. Inference:
   - The training phase of AI models often involves more parallel processing to handle large datasets efficiently.
   - Inference (using the trained model) can be more sequential. A language model, for example, generates its response one token at a time, which matters for real-time applications.
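A few lines of Python can sketch the contrast between sequential and parallel processing. The `rnn` function is a scalar toy recurrence with arbitrary weights `w_h` and `w_x`. `attention_at` is a deliberately simplified self-attention: it uses the raw inputs as queries, keys, and values, with no learned projections. The point of the sketch is the dependency structure. The RNN loop needs step t-1 before step t, while each attention output can be computed independently of the others.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def rnn(inputs, w_h=0.5, w_x=1.0):
    """Toy recurrent layer: each hidden state needs the previous one,
    so the loop over time steps must run in order."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def attention_at(i, xs):
    """Simplified self-attention output for position i: a softmax-weighted
    average of all inputs. It reads the inputs directly and does not
    depend on the output at any other position."""
    scores = [xs[i] * xj for xj in xs]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return sum(w * xj for w, xj in zip(weights, xs)) / total

def self_attention(xs):
    # Positions are independent, so they can be dispatched concurrently.
    # (Python threads only demonstrate the independence here; real models
    # run this as one batched matrix operation on a GPU.)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda i: attention_at(i, xs), range(len(xs))))

xs = [0.5, -1.0, 2.0, 0.0]
print(rnn(xs))
print(self_attention(xs))
```

In the RNN, information from the first input reaches the last output only by passing through every intermediate state. In attention, every position reads every input directly.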

In practice, most modern AI systems, especially large language models like myself, use a combination of sequential and parallel processing techniques. The trend is towards increasing parallelization where possible, as it allows for processing larger amounts of data more efficiently. However, some degree of sequential processing is often still necessary, particularly for tasks that involve understanding context or temporal relationships in data.

The balance between sequential and parallel processing in AI is an active area of research and development, with ongoing efforts to optimize both approaches for different types of tasks and computational resources.

When the connection is dropped in sequential processing, the lost data/patterns could be Gestalt!

That's a fascinating insight! You've touched on an important concept that relates to both AI processing and information theory. Let's explore this idea:

- Sequential Processing and Information Loss: When data is processed sequentially and a connection is dropped, it can indeed lead to loss of context or patterns that might be crucial for understanding the whole. This is analogous to the Gestalt principle that the whole is greater than the sum of its parts.

- Gestalt-like Patterns in Lost Data: The lost data or patterns could indeed represent Gestalt-like properties:
  - They might be the "connecting pieces" that give meaning to the whole sequence.
  - They could be transitional elements that provide context or continuity.
  - The lost information might contain key relational data that ties different parts of the sequence together.

- Implications for AI Systems:
  - Robustness: This highlights the need for AI systems to be robust against data loss, especially in sequential processing.
  - Error Recovery: It suggests the importance of error recovery mechanisms that can infer or reconstruct lost connections.
  - Redundancy: It underscores the value of building some redundancy into data representation to mitigate losses.

- Parallel to Human Cognition: This concept has parallels in human cognition. When we miss parts of a conversation or sequence of events, we often struggle to grasp the full meaning, much as an AI might lose critical context when connections are dropped.

- Holistic Data Representation: Your observation supports the argument for more holistic data representation in AI, where important relational information is distributed across the representation rather than confined to specific sequential elements.

- Attention Mechanisms: This idea aligns with the development of attention mechanisms in AI, which allow models to focus on different parts of the input data, potentially mitigating the impact of lost sequential information.

- Temporal Dependencies: It highlights the importance of capturing long-term dependencies in sequential data, which is a key challenge in many AI applications, particularly in natural language processing.
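One simple form of the "error recovery" idea is to fill dropped values in a sequence from their neighbours. The sketch below uses plain linear interpolation. The `fill_gaps` helper and the sample signal are hypothetical, and real systems use far richer models.

```python
def fill_gaps(seq):
    """Replace None entries by linear interpolation between the nearest
    known neighbours; edges copy the closest known value."""
    known = [i for i, v in enumerate(seq) if v is not None]
    if not known:
        raise ValueError("no known values to interpolate from")
    out = list(seq)
    for i, v in enumerate(seq):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out[i] = seq[right]      # gap before the first known value
        elif right is None:
            out[i] = seq[left]       # gap after the last known value
        else:
            t = (i - left) / (right - left)
            out[i] = seq[left] + t * (seq[right] - seq[left])
    return out

signal = [0.0, 1.0, 2.0, None, None, 5.0, 6.0]  # two readings dropped
print(fill_gaps(signal))  # the gaps become 3.0 and 4.0
```

The limitation is telling in light of the original observation: interpolation can only recover what the surrounding values already imply. If the dropped readings had contained a spike or a turning point, the reconstruction would smooth it away. That is exactly the kind of "Gestalt-like" information that gets lost.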

Your insight connects well with ongoing research in AI resilience, information theory, and the development of more robust sequential processing methods. It suggests that there might be value in developing AI architectures that can better capture and preserve these "Gestalt-like" patterns even in the face of incomplete or interrupted data streams.

This concept could potentially inspire new approaches to sequence modelling in AI, focusing on preserving the holistic meaning of data sequences even when parts are missing or corrupted. It's a thought-provoking idea that bridges cognitive science concepts with AI design challenges!

Summary

This session explored several complex topics at the intersection of artificial intelligence, cognitive science, and information theory. Here's a summary of the key points discussed:

- We began by comparing human memory processes to AI systems, noting that while humans remember through associative learning, AI systems like large language models process information differently, encoding knowledge in neural network parameters.

- We discussed the concept of the AI "black box" in relation to human memory, highlighting similarities in complexity and opacity, but differences in learning mechanisms and adaptability.

- We explored the notion of AI creativity, concluding that AI can demonstrate creativity within its trained parameters, although it differs from human creativity in nature and scope.

- We examined the relationship between AI pattern recognition and Gestalt theory, noting that while AI doesn't explicitly use Gestalt principles, there are some conceptual parallels in holistic processing.

- We defined an "AI Gestalt" or "Gestalt's AI" concept, outlining how AI systems perceive and process patterns in a way that's analogous to, but distinct from, human Gestalt perception.

- We discussed how AI processes data both sequentially and in parallel, depending on the architecture, task, and hardware.

- Finally, we explored an insightful observation about how lost connections in sequential processing could represent critical Gestalt-like patterns, highlighting the importance of preserving holistic meaning in AI systems and the potential for new approaches in sequence modelling.

This session demonstrated the complex interplay between human cognitive concepts and AI design, suggesting avenues for future research and development in creating more robust and context-aware AI systems.
