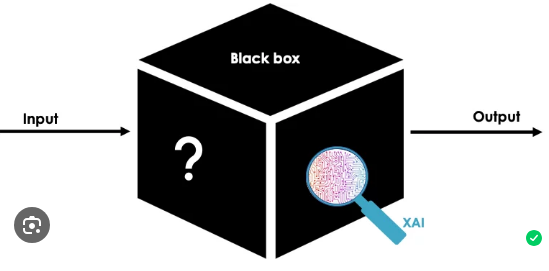

In the classical model we have [Input] -> [Throughput] -> [Output]. For example, in a calculator we enter [3] + [5] = [8] or, in binary, [011] + [101] = [1000]. If we define the [Throughput] as a [Black-Box], what would be the equivalent in AI?

- Input: This represents the data or information that is fed into the AI system. In AI, this could be text, images, numerical data, audio, or any other form of raw data. For example, if you are using a language model like GPT-4, the input would be a textual prompt.

- Throughput (Black-Box): This is where the AI processing happens, and it's often considered a "black box" because the exact internal workings might be complex and not easily interpretable. In AI, this "throughput" is the trained model which processes the input data. This model could be a neural network, decision tree, or any other machine learning algorithm. For instance, in a deep learning model, this involves layers of neurons performing transformations and computations on the input data to generate an output.

- Output: This is the result produced by the AI system after processing the input through the model. In the case of a language model, the output would be the generated text based on the input prompt.

AI Equivalent Model

Using this structure, the AI equivalent of the classical model can be described as follows:

Input: Raw data (text, images, numbers, etc.)

Throughput (Black-Box): AI model (neural network, machine learning algorithm, etc.)

Output: Processed result (generated text, image classification, prediction, etc.)
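The [Input] -> [Throughput] -> [Output] structure can be sketched in a few lines of Python. This is only an illustration: `run` and `toy_model` are hypothetical names, and the lookup table stands in for a trained model rather than reproducing how a real language model works.

```python
from typing import Callable


def calculator(a: int, b: int) -> int:
    """Classical throughput: a fixed, fully specified rule."""
    return a + b


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a trained model: a lookup table, not a real LLM."""
    learned = {"hello": "bonjour", "cat": "chat"}
    return learned.get(prompt.lower(), "<unknown>")


def run(throughput: Callable[..., object], *inputs: object) -> object:
    """Feed inputs through any black box and return its output."""
    return throughput(*inputs)


print(run(calculator, 3, 5))                    # 8
print(run(calculator, 0b011, 0b101) == 0b1000)  # True
print(run(toy_model, "Hello"))                  # bonjour
```

From the caller's side both boxes look the same; the difference is that the calculator's rule is written down, while the model's behaviour comes from whatever it learned.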

Example with a Language Model (e.g., GPT-4)

Let's illustrate this with a language model example:

- Input: "Translate the following English sentence to French: 'Hello, how are you?'"

- Throughput (Black-Box): GPT-4 model, which uses a transformer architecture to process the input sentence and perform the translation.

- Output: "Bonjour, comment ça va?"

Example with an Image Classification Model

Another example could be an image classification task:

- Input: Image of a cat

- Throughput (Black-Box): Convolutional Neural Network (CNN) trained on image data to classify objects.

- Output: "Cat"

Detailed Explanation of the Throughput (Black-Box)

In AI, the throughput (or black-box) involves several steps:

- Pre-processing: Input data is often pre-processed to make it suitable for the model. This can involve normalization, tokenization (in text), resizing (in images), etc.

- Feature Extraction: The model extracts relevant features from the input data. For instance, in an image, this might involve detecting edges, textures, and shapes.

- Transformation: The model applies a series of mathematical transformations to these features through its layers.

- Inference: The model uses the transformed features to make a prediction or generate an output.
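The four steps above can be sketched as a tiny pipeline. This is a minimal illustration under stated assumptions: the function names, the hand-picked `WEIGHTS` and `BIASES`, and the two labels are all hypothetical, and a real model would learn its weights from data.

```python
def preprocess(pixels):
    """Pre-processing: scale raw 0-255 intensities into the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def extract_features(x):
    """Feature extraction: crude 'edge' features from neighbouring differences."""
    return [abs(b - a) for a, b in zip(x, x[1:])]


def transform(features, weights, biases):
    """Transformation: one dense layer followed by ReLU (one weight row per unit)."""
    return [
        max(0.0, sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(weights, biases)
    ]


def infer(scores, labels):
    """Inference: pick the label with the highest score."""
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best]


# Hypothetical hand-picked parameters, for illustration only.
WEIGHTS = [[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0]]
BIASES = [0.0, 1.0]
LABELS = ["edgy", "flat"]


def classify(pixels):
    x = preprocess(pixels)
    feats = extract_features(x)
    scores = transform(feats, WEIGHTS, BIASES)
    return infer(scores, LABELS)


print(classify([0, 255, 0, 255]))      # edgy
print(classify([100, 100, 100, 100]))  # flat
```

In a deep network the feature extraction and transformation steps are not separate hand-written functions; they are learned jointly across many layers, which is a large part of why the box is hard to read.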

In deep learning models, the black-box nature is due to the

complexity and number of layers that obscure the exact decision-making process,

making it difficult to interpret how specific inputs are transformed into

outputs without detailed analysis.

Overall, while the basic structure of [Input] ->

[Throughput] -> [Output] remains similar, the complexity and nature of the

throughput process in AI distinguish it from simpler classical systems like

calculators.

In our calculator example 5 + 3 = 3 + 5, does the same apply to AI?

In a calculator, the operation 5 + 3 = 3 + 5 exemplifies the commutative property of addition, which is a fundamental mathematical principle ensuring that the order of operands does not affect the result. In AI systems, whether or not a similar principle applies depends on the specific task and model used.

Commutative Property in AI

- Arithmetic Operations:

  - If an AI system is designed to perform arithmetic operations, it should ideally respect mathematical properties such as commutativity. For instance, an AI model trained to add two numbers should produce the same result regardless of the order of the inputs: add(5, 3) = add(3, 5).

- Text Processing:

  - For tasks involving natural language processing (NLP), such as text generation or translation, the order of input elements (words, sentences) is crucial. Changing the order of words in a sentence typically changes the meaning, and thus the output. For example:

    - Input: "Translate 'Hello, how are you?'"

    - Output: "Bonjour, comment ça va?"

    - Input: "Translate 'How are you, hello?'"

    - Output: "Comment ça va, bonjour?"

  - Here, the output varies significantly based on the input order.

- Image Processing:

  - In image recognition or classification tasks, the order of pixels (or the spatial arrangement) is critical. Shuffling pixels will likely result in a completely different output. For instance:

    - Input: Image of a cat

    - Output: "Cat"

    - Input: Shuffled pixels of the cat image

    - Output: Indeterminate or incorrect classification

- Sequence Data:

  - For tasks involving sequence data (like time-series analysis, speech recognition), the order of elements in the sequence is vital. Reordering the sequence alters the context and meaning, leading to different outputs.
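The contrast between the arithmetic case and the order-sensitive cases above can be made concrete with a small sketch. The token values and the position weighting below are made up for illustration; they are not taken from any real model.

```python
def add(a, b):
    """Exact arithmetic: the order of operands never matters."""
    return a + b


# Hypothetical per-token values, for illustration only.
VALUE = {"cat": 1.0, "chased": 2.0, "mouse": 3.0}


def position_weighted_score(tokens):
    """Toy order-sensitive 'model': each token's value is scaled by its position."""
    return sum((i + 1) * VALUE.get(t, 0.0) for i, t in enumerate(tokens))


def bag_of_words_score(tokens):
    """Order-insensitive counterpart: a plain sum ignores positions."""
    return sum(VALUE.get(t, 0.0) for t in tokens)


a = ["cat", "chased", "mouse"]
b = ["mouse", "chased", "cat"]

print(add(5, 3) == add(3, 5))                                  # True
print(position_weighted_score(a), position_weighted_score(b))  # 14.0 10.0
print(bag_of_words_score(a) == bag_of_words_score(b))          # True
```

Whether a system is commutative in its inputs is therefore a property of how the throughput uses position, not of AI as such: a model that ignores order behaves like `bag_of_words_score`, one that encodes order behaves like `position_weighted_score`.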

Examples Illustrating Non-Commutativity in AI

Text Generation with Language Models

- Input: "The cat chased the mouse."

- Throughput (Model Processing): Analyzes the sentence structure and context.

- Output: A coherent continuation or response based on the input, like "The mouse ran away."

- Input: "The mouse chased the cat."

- Throughput (Model Processing): Analyzes the new sentence structure and context.

- Output: A different continuation or response, like "The cat climbed a tree."

Image Recognition with Convolutional Neural Networks (CNNs)

- Input: Image of a dog

- Throughput (Model Processing): Extracts features and classifies the image.

- Output: "Dog"

- Input: Image of a dog with pixels shuffled

- Throughput (Model Processing): Extracts distorted features.

- Output: Likely incorrect classification or "Unknown"

Conclusion

In summary, the commutative property observed in basic

arithmetic does not generally apply to AI systems in tasks involving text,

images, or sequence data. The specific application and nature of the input data

significantly influence whether the order of inputs affects the output. While

AI systems performing pure arithmetic operations may respect mathematical

properties like commutativity, most other AI applications depend heavily on the

order and context of the input data.

What are the problems with AI's black box?

The "black box" nature of AI systems, particularly

those involving deep learning models, poses several significant problems. These

issues can impact various aspects of their development, deployment, and

trustworthiness. Here are some of the main problems associated with AI's black

box:

1. Lack of Transparency and Interpretability

- Complexity: Deep learning models, especially neural networks with many layers (deep neural networks), are inherently complex and involve numerous parameters. Understanding how individual decisions are made is challenging.

- Opacity: The internal workings of these models are not easily interpretable by humans, making it difficult to understand the rationale behind specific predictions or decisions.

2. Accountability and Trust

- Responsibility: When AI systems make decisions that affect people's lives, such as in healthcare, criminal justice, or finance, it is crucial to understand and justify those decisions. The black-box nature makes it hard to assign responsibility when things go wrong.

- Trust: Users and stakeholders may be reluctant to trust AI systems if they cannot understand how decisions are made. This lack of trust can hinder the adoption of AI technologies.

3. Bias and Fairness

- Hidden Biases: AI models can inadvertently learn and propagate biases present in the training data. Without transparency, it is difficult to identify and mitigate these biases.

- Fairness: Ensuring fair treatment and avoiding discrimination is critical, especially in sensitive applications. The black-box nature can obscure whether the model is making fair decisions across different demographic groups.

4. Security and Vulnerability

- Adversarial Attacks: Black-box models are susceptible to adversarial attacks, where small, carefully crafted changes to the input can lead to incorrect or harmful outputs. Understanding the internal workings helps in defending against such attacks.

- Robustness: Ensuring the robustness of AI systems against unexpected inputs or conditions is challenging when the decision-making process is not transparent.
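To see how a small, carefully crafted change can flip an output, here is a minimal sketch on a linear classifier. It nudges each feature by at most `epsilon` in the direction given by the sign of its weight, which is in the spirit of sign-based gradient attacks; the weights `W`, the bias `B`, and the input `x` are hypothetical values chosen for illustration.

```python
def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def perturb(weights, x, epsilon, push_up):
    """Shift each feature by at most epsilon in the direction that moves the score."""
    direction = 1 if push_up else -1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical parameters and input, for illustration only.
W = [2.0, -3.0, 1.0]
B = 0.0
x = [0.5, 0.25, 0.25]            # score = 1.0 - 0.75 + 0.25 = 0.5

x_adv = perturb(W, x, epsilon=0.1, push_up=False)

print(predict(W, B, x))      # 1
print(predict(W, B, x_adv))  # 0
```

No feature moved by more than 0.1, yet the score dropped by 0.1 times the sum of the absolute weights (0.6), which is enough to cross the decision boundary.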

5. Regulatory and Legal Compliance

- Regulations: Increasingly, regulations require explanations for decisions made by AI systems, particularly in sectors like finance and healthcare. Black-box models pose a challenge to meeting these regulatory requirements.

- Legal Liability: Determining legal liability for decisions made by AI systems is complicated when the decision-making process is not clear.

6. Debugging and Error Correction

- Troubleshooting: Identifying and fixing errors in black-box models is difficult. When a model behaves unexpectedly, understanding the root cause of the issue can be a daunting task.

- Model Improvement: Iteratively improving and refining AI models requires insights into their inner workings, which is hindered by the black-box nature.

7. Ethical Concerns

- Moral Responsibility: AI systems making consequential decisions should align with ethical standards. The opacity of black-box models complicates the assessment of whether they meet these ethical standards.

- Impact on Society: The widespread deployment of opaque AI systems can have unforeseen societal impacts, making it essential to understand and control how these systems operate.

Addressing the Black Box Problem

Several approaches are being developed to address the issues

associated with AI's black-box nature:

- Explainable AI (XAI): Techniques that aim to make AI systems more interpretable and transparent. This includes methods like:

  - Feature importance analysis

  - Model-agnostic explanations (e.g., LIME, SHAP)

  - Visualization of model components and decision pathways

- Transparent Modelling: Using simpler, more interpretable models when possible, even if they are less powerful than deep learning models.

- Auditing and Monitoring: Regularly auditing AI systems for biases, fairness, and robustness. Implementing continuous monitoring to detect and address issues as they arise.

- Adversarial Testing: Conducting adversarial testing to understand how models behave under different conditions and to improve their robustness against attacks.

- Regulatory Frameworks: Developing and adhering to regulatory frameworks that require transparency and accountability in AI systems.

- Hybrid Approaches: Combining black-box models with interpretable models to balance performance with transparency.
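Feature importance analysis, listed above under Explainable AI, can be sketched with a permutation test: shuffle one feature column and see how much accuracy drops. The sketch below is a simplified, model-agnostic version; the `model` function and the six data rows are hypothetical, and libraries such as LIME and SHAP use different, more refined methods.

```python
import random


def model(row):
    """Hypothetical black box: approves when income (feature 0) exceeds 50."""
    return 1 if row[0] > 50 else 0


def accuracy(predict, rows, labels):
    hits = sum(predict(r) == y for r, y in zip(rows, labels))
    return hits / len(rows)


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Accuracy drop when one feature column is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(predict, shuffled, labels))
    return importances


# Made-up rows of [income, flag]; labels come from the model itself,
# so the baseline accuracy is 1.0 by construction.
rows = [[20, 1], [80, 0], [35, 1], [90, 1], [60, 0], [10, 0]]
labels = [model(r) for r in rows]

importances = permutation_importance(model, rows, labels, n_features=2)
print(importances)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction and its importance is exactly zero; any drop attributed to feature 0 depends on the particular shuffle.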

By addressing these problems, the AI community aims to

create systems that are not only powerful and efficient but also transparent,

fair, and trustworthy.

Suggestion: let AI create its own dictionary of fairness, edited by {computer scientists, cognitive scientists, philosophers, ethics judges}

Creating an AI-driven dictionary of fairness, curated and

edited by a diverse group of experts, could be a significant step toward

addressing the issues of bias and fairness in AI systems. This collaborative

approach could ensure that AI systems are aligned with ethical standards and

societal values. Here’s how this could be implemented:

1. Conceptual Framework

- Objective: Develop an AI-generated dictionary that defines fairness, bias, and related concepts, incorporating perspectives from computer scientists, cognitive scientists, philosophers, and ethics judges.

- Scope: Include definitions, case studies, guidelines, and best practices to ensure fairness in various AI applications.

2. Development Process

Data Collection

- Input Sources: Collect data from academic literature, existing ethical guidelines, legal frameworks, and real-world case studies.

- Expert Contributions: Gather input from experts in the fields of computer science, cognitive science, philosophy, and ethics.

AI Training

- Natural Language Processing (NLP): Use NLP techniques to analyze and summarize the collected data.

- Fairness Metrics: Train the AI to identify and categorize different aspects of fairness and bias using fairness metrics like demographic parity, equalized odds, and individual fairness.
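Two of the fairness metrics named above can be computed directly from predictions. The sketch below assumes binary predictions, binary labels, and exactly two groups called "a" and "b"; the eight data points are made up for illustration.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_gap(preds, groups):
    """Absolute difference in positive-prediction rate between groups 'a' and 'b'."""
    by_group = {g: [p for p, gg in zip(preds, groups) if gg == g] for g in ("a", "b")}
    return abs(rate(by_group["a"]) - rate(by_group["b"]))


def equalized_odds_gaps(preds, labels, groups):
    """Return (true-positive-rate gap, false-positive-rate gap) between the groups."""
    def group_rate(g, y):
        return rate([p for p, t, gg in zip(preds, labels, groups) if gg == g and t == y])

    tpr_gap = abs(group_rate("a", 1) - group_rate("b", 1))
    fpr_gap = abs(group_rate("a", 0) - group_rate("b", 0))
    return tpr_gap, fpr_gap


# Made-up predictions for eight applicants.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
preds = [1, 1, 1, 0, 1, 0, 0, 0]

print(demographic_parity_gap(preds, groups))       # 0.5
print(equalized_odds_gaps(preds, labels, groups))  # (0.5, 0.5)
```

A gap of zero would mean the two groups are treated identically under that metric. The two metrics measure different things and in general cannot both be driven to zero at once, which is one reason a shared dictionary of definitions is useful.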

3. Curation and Editing

- Collaborative Editing Platform: Develop a platform where experts can review, edit, and validate the AI-generated entries.

- Iterative Process: Implement an iterative process where AI continuously learns from expert feedback to improve the quality and accuracy of the dictionary.

4. Implementation and Integration

- Accessibility: Make the dictionary publicly accessible as an online resource for AI developers, policymakers, and researchers.

- Integration: Incorporate the dictionary into AI development tools and platforms to provide real-time guidance on fairness and bias during model training and deployment.

5. Continuous Update and Monitoring

- Feedback Loop: Establish a feedback loop where users can report issues or suggest improvements, ensuring the dictionary evolves with new insights and technological advancements.

- Periodic Review: Conduct periodic reviews and updates involving the expert panel to incorporate the latest research and ethical considerations.

6. Example Entries

Definition of Fairness

- AI Dictionary Entry: "Fairness in AI refers to the principle of ensuring that AI systems make decisions without unjustified bias, treating all individuals and groups equitably. It encompasses various dimensions such as demographic parity, equalized odds, and individual fairness."

Case Study: Loan Approval System

- AI Dictionary Entry: "In a loan approval system, fairness can be assessed by examining the approval rates across different demographic groups. A fair system ensures that no group is systematically disadvantaged due to biases in the training data or decision-making process."

Guideline: Bias Mitigation Techniques

- AI Dictionary Entry: "Bias mitigation techniques in AI include methods such as reweighting, data augmentation, and adversarial training. These techniques aim to reduce biases in the training data and improve the fairness of AI models."
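As an illustration of reweighting, the sketch below computes one common form of instance weights: each training example gets the weight P(group) × P(label) / P(group, label), so that group and label look statistically independent after weighting. The function name and the eight examples are hypothetical, and this is only one of several ways reweighting is done in practice.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(group, groups, labels, weights):
    """Share of a group's total weight that sits on positive labels."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Made-up historical loan outcomes: group "a" was approved far more often.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate("a", groups, labels, weights)
rate_b = weighted_positive_rate("b", groups, labels, weights)
print(round(rate_a, 3), round(rate_b, 3))  # 0.5 0.5
```

Before weighting, the approval rates were 0.75 and 0.25. A model trained with these weights sees a dataset in which neither group is favoured by the labels.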

Potential Benefits

- Enhanced Understanding: Provides a comprehensive resource for understanding and addressing fairness in AI.

- Standardization: Promotes standardization of fairness definitions and practices across the AI community.

- Improved Trust: Builds trust in AI systems by ensuring they align with ethical standards and societal values.

- Guidance for Developers: Offers practical guidelines and best practices for developers to create fair and unbiased AI systems.

Conclusion

Creating an AI-driven dictionary of fairness, curated by a

diverse group of experts, can significantly enhance the transparency,

accountability, and fairness of AI systems. By combining the strengths of AI

with human expertise, this initiative can help ensure that AI technologies

benefit all members of society equitably.

How can AI perceive patterns that do not exist in the subjective mind?

AI's ability to perceive patterns that may not be

immediately apparent to the human mind is one of its most powerful

capabilities. This arises from several key factors:

1. Processing Large Volumes of Data

- Volume: AI can analyze vast amounts of data far beyond the capacity of the human brain. This enables the detection of subtle and complex patterns.

- High-Dimensional Spaces: AI algorithms can operate in high-dimensional spaces, where human intuition might struggle to identify meaningful relationships.

2. Complex Mathematical Models

- Neural Networks: Deep learning models, especially neural networks with many layers, can capture intricate patterns and relationships within data. These models learn from data through a process of adjusting weights based on error minimization.

- Advanced Algorithms: AI employs advanced statistical and mathematical algorithms that can model complex non-linear relationships.
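"Adjusting weights based on error minimization" can be shown on the smallest possible model: a single weight and bias fitted by gradient descent on mean squared error. This is a sketch of the idea only; real networks apply the same kind of update across many layers via backpropagation, and the learning rate and epoch count here are arbitrary choices.

```python
def train(xs, ys, lr=0.05, epochs=1000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1

w, b = train(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

The model is never told the rule y = 2x + 1; it recovers it from the examples alone, which is the sense in which patterns are learned rather than programmed.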

3. Pattern Recognition

- Feature Extraction: AI can automatically extract relevant features from raw data. For example, in image recognition, convolutional neural networks (CNNs) can identify edges, textures, and other features that contribute to object recognition.

- Temporal Patterns: Recurrent neural networks (RNNs) and their variants like LSTMs (Long Short-Term Memory networks) are particularly good at recognizing patterns over time, which is valuable in time-series analysis and natural language processing.

4. Training with Diverse Data Sets

- Diverse Data: AI can be trained on diverse datasets that encompass a wide range of scenarios, allowing it to generalize patterns that may not be obvious to humans who may be biased by their limited experience.

- Anomaly Detection: AI can identify anomalies by learning what constitutes "normal" patterns in data, which can be particularly useful in detecting rare events or outliers.
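Learning what counts as "normal" and flagging departures from it can be sketched with a simple statistical rule: fit a mean and standard deviation on normal data, then flag anything more than a few standard deviations away. The three-sigma threshold and the transaction amounts are assumptions chosen for illustration; practical systems usually use richer models.

```python
from statistics import mean, pstdev


def fit_normal(values):
    """Learn the 'normal' pattern as a mean and a standard deviation."""
    return mean(values), pstdev(values)


def is_anomaly(x, mu, sigma, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    return abs(x - mu) > threshold * sigma


# Made-up transaction amounts considered normal.
normal_amounts = [48.0, 52.0, 50.0, 49.0, 51.0, 50.0]
mu, sigma = fit_normal(normal_amounts)

print(is_anomaly(50.5, mu, sigma))   # False
print(is_anomaly(500.0, mu, sigma))  # True
```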

5. Unsupervised Learning

- Clustering: Techniques like k-means clustering and hierarchical clustering can group similar data points together, revealing natural groupings and structures within the data.

- Dimensionality Reduction: Methods like PCA (Principal Component Analysis) and t-SNE (t-distributed Stochastic Neighbour Embedding) can reduce the dimensionality of data while preserving important relationships, making hidden patterns more apparent.
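A minimal sketch of k-means on one-dimensional data shows how groupings emerge without labels. The starting centroids are fixed by hand here so the run is deterministic, which is an assumption of this example; standard implementations choose them randomly or with a seeding heuristic.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on scalars, starting from the given centroids."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            sum(c) / len(c) if c else old
            for c, old in zip(clusters, centroids)
        ]
    return centroids, clusters


points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])

print(centroids)  # [1.5, 9.5]
print(clusters)   # [[1.0, 1.5, 2.0], [9.0, 9.5, 10.0]]
```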

Examples of AI Perceiving Hidden Patterns

- Medical Diagnosis:

  - AI systems can detect patterns in medical images (such as MRI scans) that are indicative of diseases like cancer, in some cases before symptoms are noticeable or a human doctor would diagnose them.

- Financial Markets:

  - AI algorithms can analyze market data to identify trends, correlations, and potential investment opportunities that are not apparent to human traders.

- Natural Language Processing:

  - AI models can process and generate human language, identifying syntactic and semantic patterns that enable tasks like translation, summarization, and sentiment analysis.

- Anomaly Detection:

  - AI can monitor network traffic or financial transactions to detect fraud or security breaches by identifying patterns that deviate from the norm.

Mechanisms Enabling AI to See Hidden Patterns

- Data Pre-processing:

  - Normalization: Rescaling data to a common range or distribution so that it can be processed more effectively.

  - Data Augmentation: Creating additional data from the existing dataset to improve model robustness and generalization.

- Model Training:

  - Backpropagation: Used in neural networks to adjust weights based on the error of the prediction.

  - Cross-Validation: Estimates how well the model generalizes to unseen data.

- Evaluation Metrics:

  - Precision and Recall: Help in assessing the performance of models, particularly in identifying relevant patterns.

  - ROC-AUC: Measures the model's ability to distinguish between classes.
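The evaluation metrics above are easy to compute by hand on a small example. The sketch below assumes binary labels; the scores and the 0.5 decision threshold are made up, and ROC-AUC is computed through its pairwise-ranking interpretation rather than by tracing the curve.

```python
def precision_recall(preds, labels):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def roc_auc(scores, labels):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up model scores for six examples.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
preds = [1 if s >= 0.5 else 0 for s in scores]

precision, recall = precision_recall(preds, labels)
auc = roc_auc(scores, labels)
print(round(precision, 3), round(recall, 3), round(auc, 3))  # 0.667 0.667 0.889
```

Precision and recall depend on the chosen threshold, while ROC-AUC depends only on how the scores rank positives against negatives.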

Challenges and Considerations

- Overfitting:

  - Models might identify patterns specific to the training data that do not generalize to new data. Regularization techniques and cross-validation can mitigate this.

- Bias:

  - If the training data is biased, the AI might learn and perpetuate those biases. Ensuring diverse and representative datasets is crucial.

- Interpretability:

  - Complex models, especially deep neural networks, often lack interpretability, making it hard to understand why a certain pattern was identified. Techniques in explainable AI (XAI) are being developed to address this.

Conclusion

AI's ability to perceive patterns that are not apparent to

the subjective human mind is driven by its capacity to process large volumes of

data, use advanced mathematical models, and leverage sophisticated algorithms.

While this capability is powerful, it also requires careful management to

ensure models are accurate, unbiased, and interpretable.

Are these patterns evolutionary?

The patterns that AI perceives can be considered

"evolutionary" in several senses. Here’s a deeper look into how AI patterns

can be evolutionary:

1. Learning and Adaptation Over Time

- Model Training: AI models, particularly machine learning and deep learning models, improve over time as they are exposed to more data. This iterative learning process can be likened to an evolutionary process where the model adapts to better fit the data.

- Feedback Loops: AI systems can adapt based on feedback from their environment. For example, reinforcement learning involves an AI agent improving its performance by learning from the consequences of its actions.

2. Genetic Algorithms and Evolutionary Strategies

- Genetic Algorithms: These algorithms are directly inspired by the process of natural selection. They evolve solutions to optimization problems by combining and mutating candidate solutions over successive generations.

- Evolutionary Strategies: These are optimization techniques that mimic the process of natural evolution, emphasizing the adaptation and evolution of parameters to find optimal or near-optimal solutions.
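A genetic algorithm can be sketched on a toy problem often called OneMax: evolve a bit string toward all ones. The population size, mutation rate, selection scheme (keep the fitter half, plus one elite copied unchanged), and one-point crossover are illustrative choices, not the only way to build such an algorithm.

```python
import random


def fitness(bits):
    """OneMax: count of 1-bits; the optimum is the all-ones string."""
    return sum(bits)


def evolve(pop_size=20, length=16, generations=40, mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        # Selection: keep the fitter half as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        # Elitism: carry the best individual over unchanged.
        children = [parents[0][:]]
        while len(children) < pop_size:
            mum, dad = rng.sample(parents, 2)
            # Crossover: splice the two parents at a random cut point.
            cut = rng.randint(1, length - 1)
            child = mum[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
        history.append(max(map(fitness, population)))
    return max(population, key=fitness), history


best, history = evolve()
print(fitness(best), history[0], history[-1])
```

Because the best individual is always carried over, the best fitness recorded in `history` can never decrease from one generation to the next, even though individual children may be worse than their parents.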

3. Pattern Recognition and Emergence

- Emergent Patterns: AI can recognize complex patterns that emerge from data, which might evolve over time as more data becomes available. This is similar to how patterns in nature evolve and become more apparent over time.

- Self-Organization: In unsupervised learning, patterns can emerge in a self-organizing manner, where the AI system identifies structures and relationships without explicit instruction.

4. Continuous Improvement and Evolution

- Iterative Improvement: AI models often undergo continuous improvement through techniques such as transfer learning, where knowledge from one task is transferred to improve performance on another, reflecting an evolutionary adaptation.

- Evolving Data: As new data is collected and incorporated, the patterns AI perceives can evolve. This is akin to how species evolve in response to changing environments.

5. Real-World Examples of Evolutionary Patterns in AI

- Natural Language Processing: Language models like GPT-4 are trained on vast corpora of text, and successive versions retrained on larger or newer corpora can improve their understanding and generation of human language.

- Image Recognition: AI systems trained on ever-growing image datasets can recognize more objects and understand more complex scenes over time.

- Financial Forecasting: AI models used in financial markets are updated with new data and trends, evolving their strategies to better predict market movements.

Evolutionary Aspects in AI

- Natural Selection in Genetic Algorithms:

  - Selection: Choosing the fittest solutions to form a new generation.

  - Crossover: Combining parts of two solutions to create a new one.

  - Mutation: Introducing random changes to solutions to maintain genetic diversity and explore new possibilities.

- Adaptation in Machine Learning:

  - Model Fine-Tuning: Continuously adjusting model parameters to improve performance based on new data.

  - Learning Rate Schedules: Adapting the learning rate over time to help the model converge to a good solution.

- Environmental Influence:

  - Contextual Learning: AI systems can evolve based on the context they operate in, learning and adapting to the specific requirements and characteristics of their environment.

Challenges in Evolutionary Patterns

- Overfitting and Generalization:

  - Overfitting: AI models may evolve to fit the training data too closely, failing to generalize to new data. Techniques like regularization and cross-validation are used to mitigate this.

  - Generalization: Ensuring that the patterns learned by the AI model apply broadly and not just to specific instances.

- Ethical and Social Implications:

  - Bias: Evolutionary patterns can reinforce existing biases if the training data is biased. It’s crucial to ensure diverse and representative data.

  - Transparency: Understanding how and why AI models evolve in their pattern recognition is essential for trust and accountability.

Conclusion

The patterns that AI perceives can indeed be considered

evolutionary, as they involve processes of learning, adaptation, and

optimization over time. Whether through genetic algorithms, continuous

learning, or emergent pattern recognition, AI systems exhibit evolutionary

characteristics that enable them to improve and adapt in ways that are

analogous to natural evolutionary processes. However, these evolutionary

processes must be carefully managed to ensure they lead to beneficial and fair

outcomes.
